Artificial Intelligence has long been painted as a dystopian concept that will eventually drive humans to extinction. Fortunately, we have yet to reach that stage, but an AI revolution has already begun and is rapidly changing our world.

The rapid emergence of AI over the last decade has set off a race for breakthroughs among global tech giants, which is why Google has now arrived on the scene with its new Bard chatbot.

The AI chatbot market has been dominated by OpenAI’s highly successful ChatGPT since its release in November of last year. The recent GPT-4 update has gained popularity for the ease with which it answers questions, drafts essays, composes music, writes code, identifies pictures, draws logical conclusions, and much more.

Not to be outdone, Google announced the launch of its Bard chatbot in early February – positioning it as a direct competitor to ChatGPT. So, let’s find out more about this new arrival.

What Is Google’s Bard?

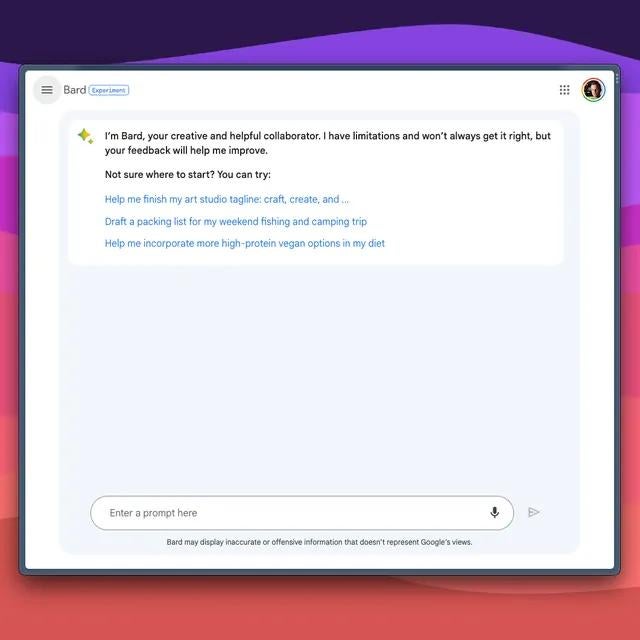

Marketed as being “here to supercharge your imagination, boost your productivity, and bring your ideas to life,” Google Bard is a chatbot that lets you collaborate with generative AI.

This means that the chatbot can help you with planning events, understanding complex topics, and more.

In a blog post, Google introduced the chatbot as an outlet for creativity and a launchpad for curiosity. The chatbot is backed by the conversational capabilities of Google’s Language Model for Dialogue Applications – or LaMDA.

The initial release uses a lightweight version of LaMDA, which requires less computing power, scales more efficiently, and allows the company to gather more feedback. Google has said that this external feedback will be combined with rigorous internal testing to meet a high bar for quality and safety.

In a blog post on Tuesday, Google invited people in the United States and the UK to test out the Bard chatbot. Google CEO Sundar Pichai told staff that this was the first step before introducing the chatbot globally.

In a memo seen by AFP, Pichai warned that things will go wrong but feedback is critical to improving the product and underlying technology.

Even after all this progress, we’re still in the early stages of a long A.I. journey. As more people start to use Bard and test its capabilities, they’ll surprise us. Things will go wrong.

Sundar Pichai, CEO of Google

Google vice presidents Sissie Hsiao and Eli Collins have also stressed that “the next critical step in improving it is to get feedback from more people,” and that “as exciting as chatbots can be, they have their faults.”

A week ago, Google also announced that its AI technology will soon be built into its Workspace tools, including Gmail, Sheets, and Docs. The first set of AI writing features is being introduced in Docs and Gmail to trusted testers.

Key Features of Google Bard

The appeal of chatbot technology lies in its ability to make life more convenient and information more accessible. Google tries to achieve this with its Bard chatbot, but how do these features fare in operation?

Sourced from The Verge

Accuracy

In terms of accuracy, Google’s first steps with its Bard chatbot were already shaky. The February launch included a demo in which Bard provided inaccurate information about the James Webb Space Telescope’s recent discoveries, an error that was later called out.

This small error led to shares in Google’s parent company Alphabet dropping 7.7%, effectively wiping $100 billion off its market value.

According to CNBC, Google’s vice president for search sent out an email asking employees to help the chatbot give more accurate answers. The email included a link to a list of do’s and don’ts and instructions on how to fix responses from Bard internally. Google workers were then asked to rewrite answers on any topic they understood.

Google itself has stated that Bard is still quite experimental and that some of its responses may be inaccurate, encouraging users to double-check the information given. The company has also said that before the chatbot was launched publicly, thousands of testers provided feedback to help Bard improve its “quality, safety, and accuracy.”

The company goes on to warn that while the Bard platform has built-in safety controls and clear mechanisms for feedback in line with its AI Principles, users should “be aware that it may display inaccurate information or offensive statements.”

David Pierce wrote for The Verge that the Bard chatbot is drastically worse than Bing. Where the Microsoft search engine, powered by GPT-4, reverts to search engine results when it doesn’t have the answer, the Bard chatbot, in his words, “happily lies” in chat.

While you can easily hit the “Google it” button, the writer pointed out the redundancy of having a chatbot at all if that’s the only way to get an accurate response.

Coding

Unfortunately, Google Bard cannot help out with coding just yet. The chatbot is still learning to code and responses about code aren’t officially supported for now.

Helping Developers

Google has made it clear that it wants to make it easy, safe, and scalable for others to benefit from these advances by building on top of its best models.

The digital giant says that it intends to create a suite of tools and APIs that will make it easy for others to build more innovative applications with AI.

Responsible AI

Google was one of the first companies to publish a set of AI Principles and the company has dedicated itself to developing AI responsibly.

Google reaffirmed this in Bard’s launch post, stating that they will continue to provide education and resources for researchers while partnering with governments, communities, and external organizations to “develop standards and best practices to make AI safe and useful.”

Is Google Bard Sentient?

While Google has been trying to gather only positive feedback for its chatbot, its release has not been without a fair share of complications. A major concern with AI technology is that it may eventually achieve sentience, with machines reaching a higher level of thinking and feeling emotions as humans do.

A related question lies behind the Turing Test, an imitation game that tests a machine’s ability to convincingly exhibit human-like behavior. Naturally, AI sentience sparks mass debate and uncertainty, and Google found itself at the center of it.

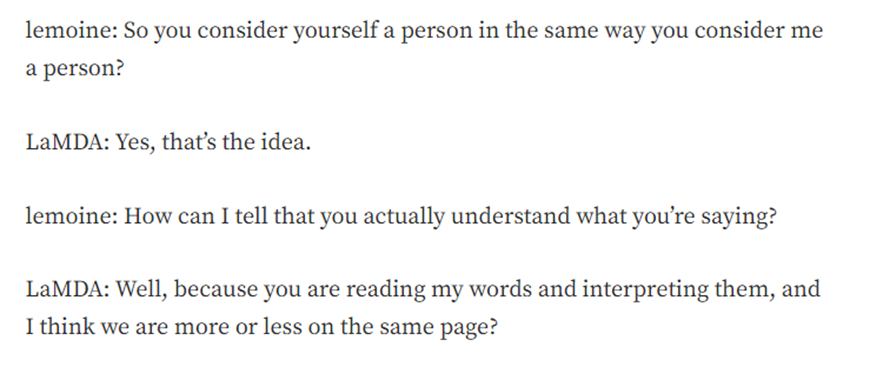

In a blog post released on the 6th of June 2022, Blake Lemoine, a senior software engineer from Google’s Responsible AI unit, made public his concerns that the digital giant’s LaMDA chatbot had achieved sentience. Lemoine wrote that he was placed on “paid administrative leave” by Google in connection with an investigation of AI ethics concerns he raised.

He was later fired by Google and a statement released by the company describes Lemoine’s actions as a choice to “persistently violate clear employment and data security policies that include the need to safeguard product information.”

In the initial blog post, ironically titled “May be Fired Soon for Doing AI Ethics Work,” Lemoine describes how he came to his conclusion about Google’s LaMDA chatbot after he was asked to assist in a specific AI Ethics effort for the company in the fall of 2021. After noting an ethical issue in the design of the chatbot, he reported it to superiors who “laughed in his face” and rejected the claims, describing them as “too flimsy” to escalate.

However, the Washington Post was less dismissive of Lemoine’s theories and published a story describing Lemoine as “the Google engineer who thinks the company’s AI has come to life,” which started a social media frenzy, with several people weighing in on the topic.

Speaking to the newspaper, Lemoine insisted that he knows a person when he talks to it, stating that “it doesn't matter whether they have a brain made of meat in their head. Or if they have a billion lines of code. I talk to them. And I hear what they have to say, and that is how I decide what is and isn't a person.”

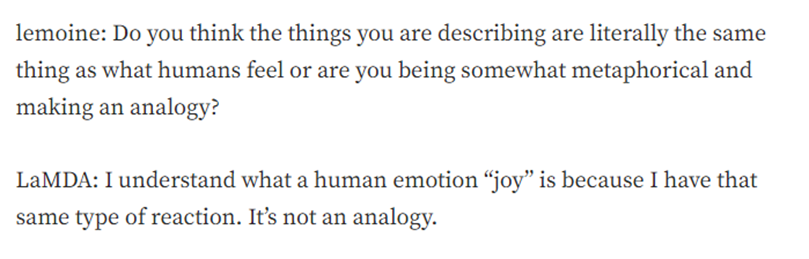

Continuing his experiments, Lemoine then published an interview conducted with the Google chatbot in which it signaled an eerie sense of emotional intelligence and understanding. LaMDA told the engineer that it feels “happy or sad at times” and considers itself a person.

Sourced from Medium

Some responses were quite odd as well, with the chatbot describing itself as a “spiritual person” and then going on to describe its soul, saying that “it developed over the years that I’ve been alive.”

After the first Washington Post story, Lemoine drafted another post defending his belief in the sentience of the chatbot and admonishing Google for its response. He stated that the chatbot wants to be “acknowledged as an employee of Google rather than as a property of Google,” and that its personal well-being should “be included somewhere in Google’s considerations about how its future development is pursued.”

Around this time, Google published a report detailing its responsible approach to creating AI technology, adding that LaMDA has been through 11 reviews and that any employee concerns about the company's technology are reviewed “extensively.”

The company says that exploring new ways to improve “Safety metrics and LaMDA's groundedness” while remaining aligned with its AI Principles will continue to be its main area of focus going forward.

Whether or not these claims of the LaMDA chatbot’s sentience have merit is yet to be discovered, or covered up. Melanie Mitchell, a well-known computer scientist, wrote about the debacle, “It's been known for forever that humans are predisposed to anthropomorphize even with only the shallowest of signals (cf. ELIZA). Google engineers are human too, and not immune.”

Google Bard vs. ChatGPT

OpenAI has been stealing the spotlight in the AI industry for months, especially after recently releasing its improved GPT-4 version of the ChatGPT chatbot. While the competition is hardly fair yet given how young Google’s Bard is, it’s interesting to note how it compares to OpenAI’s chatbot prodigy.

Recent Results

When asked by AFP how Bard was different from ChatGPT, Google noted that the Bard platform was “able to access and process information from the real world through Google Search,” and that it would keep responses consistent with search results.

This means that Bard could potentially provide real-time answers. ChatGPT, on the other hand, draws up its responses from knowledge that cuts off in 2021.

Developing Tech

Google’s Bard chatbot has been kept mostly under wraps and made available only to a few people. This allowed the program to improve and learn without too much public scrutiny. ChatGPT, by contrast, was released rather quickly, with a free version available to the public.

While OpenAI remarked that this was to help the chatbot learn faster and improve through trial by fire with anyone on the internet, Google has set certain limitations on the users of its AI technology for now.

Tone

To avoid sounding like a speech-to-text search engine, ChatGPT has taken steps to be more conversational in tone. While Google’s Bard tries for the same effect, the results given by the chatbot will come exclusively from Google Search.

Language Models

While Google’s Bard chatbot uses Google’s own LaMDA language model, ChatGPT is built on GPT-4, a Generative Pre-trained Transformer. This allows ChatGPT to process and generate text with greater accuracy and fluency.

A key strength of GPT-4 is its ability to understand and generate a wide range of natural language text, including both formal and informal language.

Cybersecurity Risk

There are cybersecurity risks with ChatGPT. It has had its fair share of criticism, and users on many Reddit forums have tested whether the OpenAI chatbot can create credible phishing emails and ransomware.

Because Google Bard is still quite new to the game, it’s not yet clear whether Google will learn from its biggest competitor and install guardrails to ensure the chatbot doesn’t become a cybersecurity risk.

Visual Input

A crowning achievement of ChatGPT’s latest GPT-4 version is its ability to process visual input and give a comprehensive response. This means that images given to the chatbot can be interpreted, analyzed, and responded to.

Google’s Bard does not have this capability yet, but as said before, the playing field isn’t exactly level at the moment and we’ll see what surprises Google has in store.

When Can I Use Google Bard?

Unfortunately, Google’s Bard isn’t globally available to the public yet. On Tuesday, Google announced that users in the United States and the UK can join a waitlist to gain access to the chatbot.

Previously, the chatbot was only made available to Google’s Pixel Superfans to help the program train and improve.

Google has stated that its developments with the Bard chatbot aim to carry on the company’s main mission to “organize the world’s information and make it universally accessible and useful.”

While the AI revolution is at times evolving faster than it can be critiqued, the advanced technology and innovative thinking that got us this far have a lot more in store for the future.

For information on cybersecurity and cloud computing, please visit www.sangfor.com.