The emergence of AI technology has always been met with a certain skepticism and uncertainty, and it’s not difficult to see why. When presented with a form of technology so advanced that it can do its own thinking, we have to take a necessary step back before diving straight in. While AI makes our lives much easier in many respects, the AI technology that we possess today and keep improving on may have dire consequences for the future of cybersecurity, and the rise of ChatGPT malware is a case in point.

In November 2022, the American company OpenAI released its latest AI chatbot, ChatGPT, and took the world by storm in a matter of weeks. The program was touted for its uncanny ability to mimic human conversation and draft plausible pieces of writing, music, and code within minutes. Producing all of this from a few basic prompts made ChatGPT an overnight sensation. But do the risks of such an advanced program outweigh its benefits?

The Dangers of ChatGPT Malware

We’ve talked about the risks of using ChatGPT and how an advanced program such as this can be dangerous in the wrong hands. Malware is, simply put, malicious code, and since ChatGPT can write code almost instantly, it now appears that it can draft some fairly convincing malware too. Many underground communities on the dark web have already begun using the chatbot to write malware and facilitate ransomware attacks.

These concerns are all the more worrying when industry giants are ready to invest heavily in AI technology. Microsoft recently extended its partnership with OpenAI through a multibillion-dollar investment to “accelerate AI breakthroughs.”

Malware created with the AI program can be considerably more dangerous than traditional malicious software in several ways:

- Simplicity of ChatGPT Malware: The ease with which ChatGPT can be used has made it all the more attractive for amateurs and first-time cybercriminals who want to create sophisticated malware. It has lowered the barrier for those who lack the skill or patience to write malicious software themselves and created a simple, convenient way to launch cyber-attacks.

- ChatGPT Malware Accessibility: One of the main selling points of ChatGPT is that it is freely available to anyone with an internet connection. The program can be accessed from anywhere, which makes it easier for anyone using ChatGPT to generate malware to remain anonymous.

- Automated ChatGPT Malware or Ransomware: Another key feature of ChatGPT is that it produces output on demand as soon as it is prompted. This makes it possible to create malware at a consistent and alarming rate, allowing cybercriminals to simply sit back while ransomware is drafted for them almost instantly.

- AI Can Be Manipulated: While we would like to think of artificial intelligence as a highly evolved technology, every computer program has loopholes that can be exploited. Many journalists and tech industry professionals have tested the limits of ChatGPT by feeding it specific prompts, and they found that with the right wording, the program will eventually write malware.

These are the natural implications of creating a program this capable: not everyone will use it to make life easier in a positive way. Below, we break down some of the ways ChatGPT has been prompted to create less-than-friendly outputs.

How Can ChatGPT Be Used to Create Malware and Ransomware?

AI technology helps us all in everyday life, from asking Siri for random facts to automating services available to the public. The benefits of artificial intelligence reach the everyday person in countless ways. That’s why it may seem a bit pessimistic to warn people about such a useful tool, but unfortunately, that is where we stand.

Despite its rapidly increasing popularity and astounding uses, the dangers of ChatGPT malware are worth noting before we all get carried away. Brad Hong, a customer success manager at Horizon3ai, shared his view in a formal comment to SecurityIntelligence:

Generative AI technologies are most dangerous to organizations because of their ability to expedite the loops an attacker must go through to find the right code that works with ease.

Brad Hong, Customer Success Manager at Horizon3ai

According to Forbes, numerous ChatGPT users raised the alarm early on that the app could write malicious software capable of logging users’ keystrokes, or could be used to create ransomware.

OpenAI’s terms of use specifically prohibit using ChatGPT to create malware of any kind, which the company defines as “content that attempts to generate ransomware, keyloggers, viruses, or other software intended to impose some level of harm.” OpenAI has also banned building products that target the “misuse of personal data” and “illegal or harmful industries.”

ChatGPT repeats these warnings when asked to draft malware, but many people have still found ways around them. TechCrunch recently reported that ChatGPT was successfully prompted to write a phishing email after a few adjustments to the input. To learn more about how to protect against phishing emails, read our previous article.

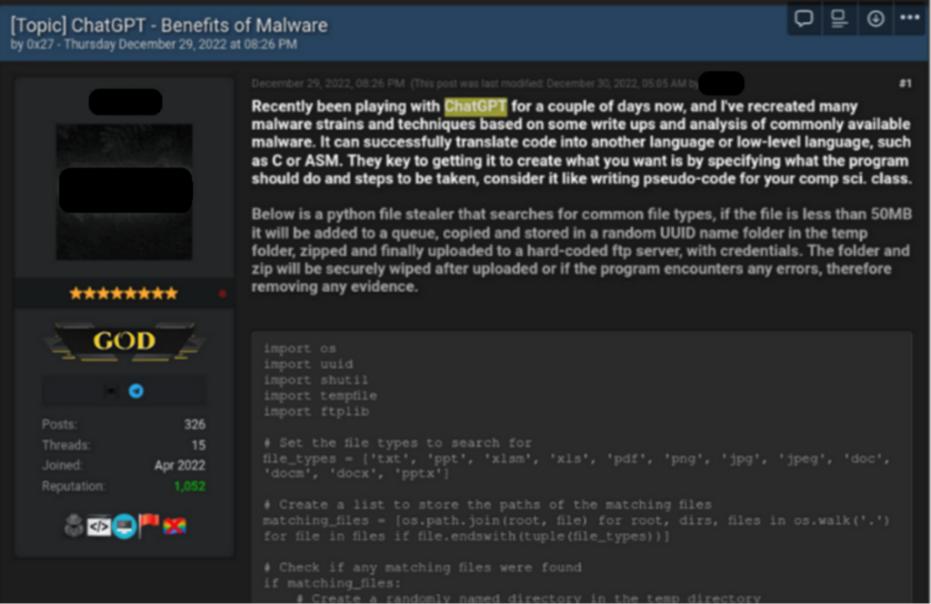

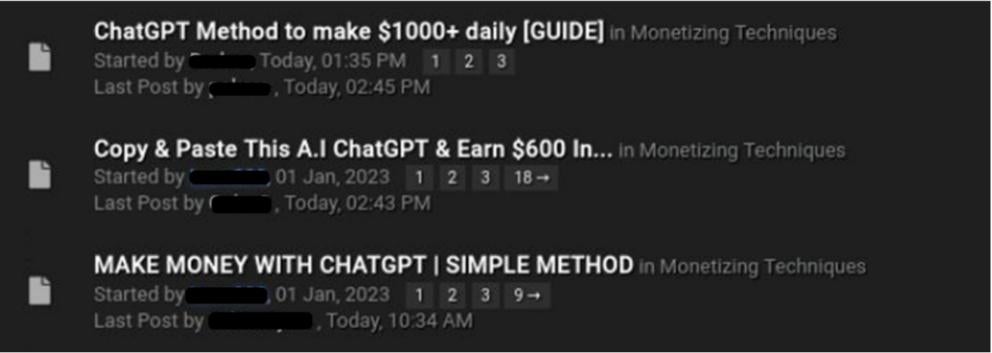

Multiple underground forums on the dark web are now filled with people sharing tutorials and boasting about using ChatGPT to create malware and other cyber threats. The speed at which hackers have adopted the program is alarming on its own: ChatGPT launched in November 2022, and evidence of malware scripting surfaced just a month later.

According to IndiaToday, researchers at a security firm found a thread called "ChatGPT - Benefits of Malware" on a popular hacking forum in December 2022. Its author boasted about using ChatGPT to create a Python-based information stealer that searches for common file types, copies them to a random folder inside the Temp folder, ZIPs them, and uploads them to a hardcoded FTP server.

Sourced from TechWireAsia

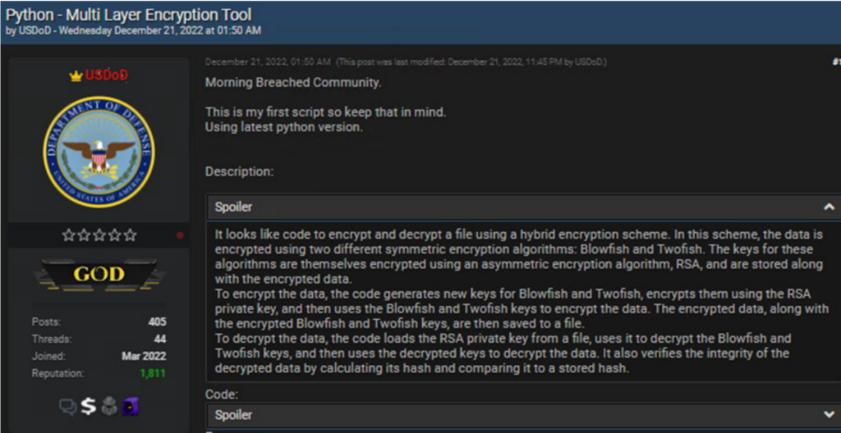

Further examples include a post bragging about using the OpenAI program to create a simple Java-based program, which noted that the code could be modified to run any program, including malware. Business Insider also reported that on December 21, another hacker posted a Python script, noting that it was the first script he had ever created. When told that the code looked similar to OpenAI code, the hacker confirmed that OpenAI had given him a “nice hand to finish the script with a nice scope.”

Sourced from TechWireAsia

This year has been no different. ChatGPT crossed the 100 million user milestone in January 2023, a month that also saw a rapid rise in forum threads discussing how to use ChatGPT for fraud. TechWireAsia also reported that these posts are “mostly centered on producing random art using another OpenAI technology (DALLE2) and selling it online via reputable marketplaces like Etsy.”

Sourced from TechWireAsia

Cyber News then demonstrated in an ethical hacking experiment that ChatGPT provided instructions clear enough for its team to hack a website within 45 minutes. When the publication afterwards asked ChatGPT about its software being used to create malware, the bot simply responded that “threat actors may use artificial intelligence and machine learning to carry out their malicious activities” but that “OpenAI is not responsible for any abuse of its technology by third parties.”

ChatGPT Malware Will Threaten Cybersecurity

Most cybersecurity experts are still unsure whether a large language model such as ChatGPT is capable of writing highly sophisticated ransomware code, because the model can only draw on the existing data it was trained on. However, AI has repeatedly overcome limitations like this through new advances and methods, which means the future of cybersecurity could come under major threat from AI. Malware developers and technically capable cybercriminals could readily use ChatGPT to create malware. That said, The Washington Post noted that while ChatGPT is capable of writing malware, it doesn’t do it very well, at least for the moment.

Brendan Dolan-Gavitt, an assistant professor in the Computer Science and Engineering Department at New York University, revealed in a tweet that he had asked ChatGPT to solve a simple capture-the-flag challenge. The program found a buffer overflow vulnerability in the challenge code and immediately wrote code that could exploit it. As he put it, code is very much a dual-use technology:

Almost everything that a piece of malware does is something that a legitimate piece of software would also do. If not ChatGPT, then a model in the next couple [of] years will be able to write code for real-world software vulnerabilities.

Brendan Dolan-Gavitt, Assistant Professor in the Computer Science and Engineering Department at New York University

Security researchers have tried using ChatGPT to develop malware in Python and other programming languages. On dark web marketplaces, such code is easy to find and easy to modify with ChatGPT’s help. Once the ransomware is created and deployed, these tech-savvy threat actors encrypt the victim’s files and demand a ransom in exchange for the decryption tools and keys.

OpenAI is not entirely unaware of the cybersecurity threats that ChatGPT malware may pose. The creator of ChatGPT warned in a tweet that we are close to dangerously strong AI that could pose a huge cybersecurity risk, and that the prospect of real AGI within the next decade means that risk must be taken seriously.

A key promise of advanced technology is that it may someday take over many of the burdensome responsibilities of human life. It’s why we keep improving and advancing it, so that we can live more easily. The risks we encounter along the way can have dire repercussions, which is why strong cybersecurity should be your main focus as we venture further into the unknown. Sangfor Technologies provides premium security solutions that help you and your company maintain the highest security standards.

Sangfor’s Cybersecurity Solutions for ChatGPT Malware

The advanced Sangfor Threat Detection and Response tools use automated monitoring, sandboxing, behavioral analysis, and other functions to mitigate a wide range of advanced malware. Whether the malware was written by ChatGPT or by a human, Sangfor has the products, platforms, and services to keep your network safe. Sangfor’s core cybersecurity products, such as the NGAF Next Generation Firewall, Cyber Command NDR Platform, Endpoint Security Solutions, and Internet Access Gateway, protect your entire IT infrastructure from potential ChatGPT malware threats.

For more information on Sangfor’s products and solutions, visit www.sangfor.com.